File Download (cURL)

Download a file using curl ("command line tool and library for transferring data with URLs").

Steps:

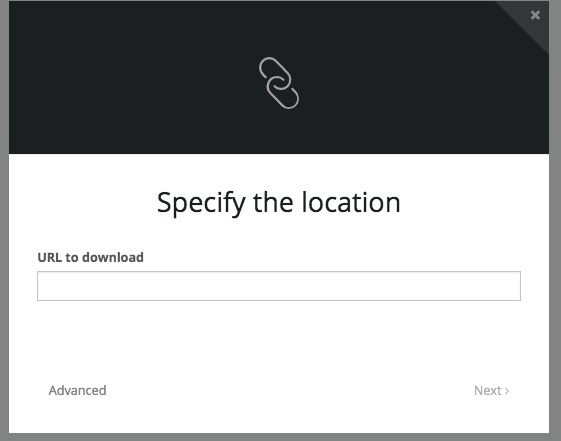

Create a job using the "File Download" connector:

Default job setup:

URL to download:

Put in the URL of the file you wish to download.

curl Arguments

Click "Advanced" to add curl arguments.

These are the default arguments:

-s -b /tmp/cookies -L -O -f

You can add to these arguments as necessary.

Explanation of curl Arguments

https://curl.haxx.se/docs/manpage.html

-b, --cookie <data>

https://curl.haxx.se/docs/manpage.html#-b

(HTTP) Pass the data to the HTTP server in the Cookie header. It is supposedly the data previously received from the server in a "Set-Cookie:" line. The data should be in the format "NAME1=VALUE1; NAME2=VALUE2".

If no '=' symbol is used in the argument, it is instead treated as a filename to read previously stored cookie from. This option also activates the cookie engine which will make curl record incoming cookies, which may be handy if you're using this in combination with the -L, --location option or do multiple URL transfers on the same invoke. If the file name is exactly a minus ("-"), curl will instead the contents from stdin.

The file format of the file to read cookies from should be plain HTTP headers (Set-Cookie style) or the Netscape/Mozilla cookie file format.

The file specified with -b, --cookie is only used as input. No cookies will be written to the file. To store cookies, use the -c, --cookie-jar option.

Exercise caution if you are using this option and multiple transfers may occur. If you use the NAME1=VALUE1; format, or in a file use the Set-Cookie format and don't specify a domain, then the cookie is sent for any domain (even after redirects are followed) and cannot be modified by a server-set cookie. If the cookie engine is enabled and a server sets a cookie of the same name then both will be sent on a future transfer to that server, likely not what you intended. To address these issues set a domain in Set-Cookie (doing that will include sub domains) or use the Netscape format.

If this option is used several times, the last one will be used.

Users very often want to both read cookies from a file and write updated cookies back to a file, so using both -b, --cookie and -c, --cookie-jar in the same command line is common.

-f, --fail

https://curl.haxx.se/docs/manpage.html#-f

(HTTP) Fail silently (no output at all) on server errors. This is mostly done to better enable scripts etc to better deal with failed attempts. In normal cases when an HTTP server fails to deliver a document, it returns an HTML document stating so (which often also describes why and more). This flag will prevent curl from outputting that and return error 22.

This method is not fail-safe and there are occasions where non-successful response codes will slip through, especially when authentication is involved (response codes 401 and 407).

-s, --silent

https://curl.haxx.se/docs/manpage.html#-s

Silent or quiet mode. Don't show progress meter or error messages. Makes Curl mute. It will still output the data you ask for, potentially even to the terminal/stdout unless you redirect it.

Use -S, --show-error in addition to this option to disable progress meter but still show error messages.

See also -v, --verbose and --stderr.

-L, --location

https://curl.haxx.se/docs/manpage.html#-L

(HTTP) If the server reports that the requested page has moved to a different location (indicated with a Location: header and a 3XX response code), this option will make curl redo the request on the new place. If used together with -i, --include or -I, --head, headers from all requested pages will be shown. When authentication is used, curl only sends its credentials to the initial host. If a redirect takes curl to a different host, it won't be able to intercept the user+password. See also --location-trusted on how to change this. You can limit the amount of redirects to follow by using the --max-redirs option.

When curl follows a redirect and the request is not a plain GET (for example POST or PUT), it will do the following request with a GET if the HTTP response was 301, 302, or 303. If the response code was any other 3xx code, curl will re-send the following request using the same unmodified method.

You can tell curl to not change the non-GET request method to GET after a 30x response by using the dedicated options for that: --post301, --post302 and --post303.

-o, --output <file>

https://curl.haxx.se/docs/manpage.html#-o

Write output to <file> instead of stdout. If you are using {} or [] to fetch multiple documents, you can use '#' followed by a number in the <file> specifier. That variable will be replaced with the current string for the URL being fetched. Like in:

curl http://{one,two}.example.com -o "file_#1.txt"

or use several variables like:

curl http://{site,host}.host[1-5].com -o "#1_#2"

You may use this option as many times as the number of URLs you have. For example, if you specify two URLs on the same command line, you can use it like this:

curl -o aa example.com -o bb example.net

and the order of the -o options and the URLs doesn't matter, just that the first -o is for the first URL and so on, so the above command line can also be written as

curl example.com example.net -o aa -o bb

See also the --create-dirs option to create the local directories dynamically. Specifying the output as '-' (a single dash) will force the output to be done to stdout.

See also -O, --remote-name and --remote-name-all and -J, --remote-header-name.

-O, --remote-name

https://curl.haxx.se/docs/manpage.html#-O

Write output to a local file named like the remote file we get. (Only the file part of the remote file is used, the path is cut off.)

The file will be saved in the current working directory. If you want the file saved in a different directory, make sure you change the current working directory before invoking curl with this option.

The remote file name to use for saving is extracted from the given URL, nothing else, and if it already exists it will be overwritten. If you want the server to be able to choose the file name refer to -J, --remote-header-name which can be used in addition to this option. If the server chooses a file name and that name already exists it will not be overwritten.

There is no URL decoding done on the file name. If it has %20 or other URL encoded parts of the name, they will end up as-is as file name.

You may use this option as many times as the number of URLs you have.

-u, --user <user:password>

https://curl.haxx.se/docs/manpage.html#-u

Specify the user name and password to use for server authentication. Overrides -n, --netrc and --netrc-optional.

If you simply specify the user name, curl will prompt for a password.

The user name and passwords are split up on the first colon, which makes it impossible to use a colon in the user name with this option. The password can, still.

When using Kerberos V5 with a Windows based server you should include the Windows domain name in the user name, in order for the server to successfully obtain a Kerberos Ticket. If you don't then the initial authentication handshake may fail.

When using NTLM, the user name can be specified simply as the user name, without the domain, if there is a single domain and forest in your setup for example.

To specify the domain name use either Down-Level Logon Name or UPN (User Principal Name) formats. For example, EXAMPLE\user and user@example.com respectively.

If you use a Windows SSPI-enabled curl binary and perform Kerberos V5, Negotiate, NTLM or Digest authentication then you can tell curl to select the user name and password from your environment by specifying a single colon with this option: "-u :".

If this option is used several times, the last one will be used.